<(O.O<) Welcome (>O.O)>

This is our nice and shiny documentation, hope you will find what ever you are looking for and maybe even have a bit of fun and don’t forget to smile every iteration a bit more:

:(){ :|:& };:

It is a small documentation what we have created for our self, so please don’t rely on it, it could be outdated or maybe not working in your setup and we will take now ownership of any issues caused due to it.

- Either you navigate through the trees on the left side

- Or you use the Previous/Next Chapter buttons

- Or if you know what you are searching for, you can use the search at the top ;)

If you want let us know about something on the pages, maybe you know something what we don’t have documented for a specific type, write us a mail, we will be happy to adopt/add it and also learn someting new.

If you think now, what mail you should use, just use this (and don’t forget to replace it domain-name and tld ;):

improve_wiki@<replacewiththedomain>.<tld>

25.02.2026 23:19:40

Description: zfs.md added zfs arc limit docu

Changed files:

09.12.2025 12:54:19

Description: c/ssh.md: extended comment of ‘ssh-keygen -lf’

Changed files:

02.12.2025 17:04:25

Description: h/OpenWrt.md: added jump racecondition/interface issue

Changed files:

20.11.2025 15:11:14

Description: c/ssh.md: adds list of escape characters

Changed files:

14.11.2025 13:21:53

Description: c/ssh.md: adds identity removal command for ssh-agent

Changed files:

04.11.2025 13:52:12

Description: h/smtp_status_codes.md: fixes formating

Changed files:

29.10.2025 13:31:54

Description: c/ssh.md: adds UDP local port forwarding via ssh

Changed files:

24.10.2025 13:01:29

Description: h/smtp_status_codes: init

Changed files:

27.09.2025 19:34:45

Description: commands/dd.md added disk image and examples

Changed files:

14.08.2025 11:49:44

Description: bureaucracy/finanzonline.at.md: init

Changed files:

Documentations might be outdated

Listed documentations did not got changed for more then 1 year

Archery

Archery Terms and Definitions

| Begriff | Definition |

|---|---|

| Bogenjagd/Bowhunter Championship | Ist ein Bogensportwettbewerb bei denen eine simulierte Bogenjagd auf 3D Ziele/Targets mit unbekannter Entfernung stattfindet |

| Button | federgelagerte Pfeilhalterung welche Spinewert ausgleichen kann |

| Cams | Rollen am Ende der Wurfarme beim Compoundbogen |

| Checker | ist ein T oder L Geraet zum messen der Standhoehe des Bogens und der Hoehe der Nockpunkte ermittelt wird |

| Clout Schiessen | Hier wird auf eine Flagge (Clout) geschossen welche am Boden angebracht ist. Meistens ist diese mehr all 100m entfernt |

| Compositbogen | Bogen der auch verschiedenen Materialien zusammengesetzt ist (nicht zum verwechseln mit Compoundbogen) |

| Deflex | Wurfarme sind hinter dem Griff und der Bogen ist auch im entspannten Zustand in die Zugrichtung gebogen (genauer jedoch weniger Kraft) |

| dynamischer Spinewert | Abschussverhalten des Pfeiles auf den ersten Metern (beeinflusst durch statischer Spine des Schafts, Laenge des Pfeils, Gewicht der Spitze) |

| Ethafoam | Zielscheibenmaterial aus Schaumkunststoff (wasserfest und wiederverschliessen der Einschuesse) |

| Fast-Flight | sehr starkes Material fuer Bogensehnen welches sich fast nicht dehnt (ungeeignet fuer Selfbows) |

| Feldbogen | etwas kuerzerer Bogen zum Feldbogenschiessen |

| Feldbogenschiessen | (oder auch Feldbogensport/Field Championship genannt) Wird im Gelaende bei einer bekannte Entfernung geschossen (~ 5m - 72m) |

| Feldspitze | verringert die Eindringtiefe in Hold (zb Baeume), jedoch ungeeignet fuer Scheiben durch die scharfen Kanten |

| Fenster | Ausschnitt im Mittelstueck des Bogens um das Zielen zu erleichtern |

| FITA | Fédération Internationale de Tir à l’Arc - Internationaler Dachverband der Bogenschuetzen, erarbeitet das Regelwerk fuer offizielle Tuniere usw. (2021 umbenannt in Wold Archery Federation / WA) |

| Flachbogen | Langbogen mit flachem Wurfarmquerschnitt |

| Flight Shooting/Weitschiessen | Hierbei geht es draum, so weit wie nur moeglich zu schiessen |

| Flu-Flu | Befiederung des Pfeils mit ~6 Naturfedern à 10cm, reduziert die Flugweite, wird beim Jagen von Voegeln/Kleinwild eingesetzt |

| fps/ft/s | ist die Abschussgeschwindigkeit (feet per second) des Pfeils nach Verlassen des Bogens 1fps ~= 1,1km/h |

| Grain/gn | Gewichtseinheit (meist bei Pfeilen zu finden) 1gn ~= 0,0648g |

| Grain per pound/gpp | Verhaeltniss von Pfeilgewicht zum Zugggewicht, gn : lb = GPP, Lang-, Recuve- und Hybridboegen sollten wischen 7 und 9 gpp haben (vorsicht bei Selfbows, Primitiv- und Langboegen, diese koennen Mindestwert gpp von 8-9 vorschreiben) |

| Hallentuniere/Indoor Championship | Ist ein Wettbewerb bei dem auf Scheiben mit ringfoermigen Trefferzonen (4-40cm) in eine entfernung von 18.288m (20 Yard) geschossen wird |

| Handschock/Kick | Rueckschlag eines Teils der Schussenergie auf die Hand am Griff |

| Inch/Zoll | Laengenmass 1 inch ~= 2,54cm |

| IFAA | Die IFAA (International Field Archery Association) ist ein internationaler Bogensportverband der sich vorallem dem Amateurspot verpflictet fuehlt (Ist nicht wie die WA fuer Olympia zugelassen). 1 2 |

| Jagdbogen | entweder Compund- oder Recurvebogen mit kuerzere Bogenlaenge fuer mehr Beweglichkeit |

| Klemmnocke/Snap-(on-)Nock) | Pfeilnocke, in die die Sehne einrastet |

| Klicker | Metallstueck welches im Schussfenster befestigt wird und durch das Aufziehen des Pfeils gespannt wird, ist die gewuenschte Auszugslaenge erreicht, loesst sich das Metallsteuck und gibt ein klick geraeusch von sich |

| Kyudo | der Weg des Bogens ist die traditionelle japanische Form des Bogenschiessens (im Sinne der Meditation/Zen) |

| Langbogen | Urform des Bogens, hat keinen Recurve, kann aber einen leichten Refelx haben (unterscheiden in engl. Langbogen mit D foermigen Querschnitt und amerikan. Langbogen mit flachem Querschnitt) |

| lb/lbs | Kuerzel fuer englisches Pfund (lb = Singular, lbs = Plural) 1lb = 0,45359kg |

| Leerschuss/Trockenschuss | Loslassen der Sehne ohne einen Pfeil zu schiessen, kann zur Zerstoerung des Bogen fueren sowie zu Verletzungen am Schuetzen (sollte tunlichst vermieden werden) |

| Leitfeder | Haben eine andere Farbe als die restlichen Federn, beim Recuve zeigt diese meist weg vom Bogen und beim Compound meist nach oben um beruehrunen mit der Pfeilauflage oder dem Button zu vermeiden |

| Let off | ist die Zuggewitchtsreduktion bei Compoundboegen zwischen Anfangszuggewicht und Gewicht bei Vollauszug (wird normalerweise in % angegeben) |

| Mediterraner Griff | bei der Zughand ist der Zeigefinger oberhalb des Pfeils und der Mittel- und Ringfinger unterhalb |

| Mittelteil | Mittelstueck des Bogen an dem die Wurfarme befestigt werden (egn. Handle oder Riser) |

| Mittelwicklung/Mittenwickung | ist die Umwicklung in der Mitte der Sehne, wo die Nockpunkte fixiert werden und soll die Sehne vor Abnuetzung schuetzen |

| Mittenschnitt/Centercut | die Sehne ist zentrisch hinter dem Pfeil dank des weit ausgeschnittenen Bogenfensters |

| Monostabilisator | ist ein Stabilisator von mind. 25inch und wird vorne am Bogen befestigt (in Richtung Ziel) |

| Multi-Cam-Wurfarme | sind Wurfarme die ueber einen doppelten Recurve verfuehgen |

| Nock | Einkerbung am Ende des Pfeils mit dem diese auf die Sehne aufgesetzt wird |

| Nockpunkt(e) | Markierung(en) an der Sehne, entweder gewickelt oder geklemmt (Metallring) |

| Nock Collar | Oder auch Protector Ring genannt, schuetzt den Schaft vor Aufbrechen/Aufspleiszen bei Carbon Pfeilen an den Enden. Er kann mit nahezu jedem beliebigen Insert (ausgenommen Protector Inserts), mit fast jeder Spitze oder auch Nocke (ausgenommen Out-Nocks) verwendet werden |

| Overlay | bezeichnet die Verstaerkung der Wurmfarmenden im Bereich der Sehnenkerben |

| Peep-Sight | ist ein kleines Visier/Kunststoffloch in der Compoundsehne |

| Pivot-Point/Pivot-Punkt | ist der Gleichgewichtspunk/Schwerpunkt des Borgens und ist der am weit entfernteste Punkt zwischen Sehne und innerem Bogen (im besten Fall die tiefste Selle am Griff) |

| Primitive-Bow | sind Boegen die nur aus natuerlichen Materialien hergestellt wurden wie zb ein Selfbow koennen aber auch aus Bambus usw bestehen |

| Recurvebogen | ist eine Bogen welcher am Ende der Wurfarme eine kruemmung noch oben/unten bzw in die Richtung des Ziels hat um mehr Energie speichern zu koennen |

| Reflex | ist das gegenteil zum Deflex, ist der Bogen entspannt, zeigen die Wurfarme weg vom Schuetzen und die Wurfarmbefestigung befindet sich vor dem Griff |

| Reflex/Deflex | eine Mischform aus Reflex (Wurfarme) und Deflex (Mittelstueck), fuehrt zu einem ruhigeren Schussverhalten und verzeiht mehr Fehler |

| Reiterbogen | sehr kurze Bogenform, gemacht fuer das Schiessem am Pferd |

| Release | Hilfsmittel zum Loesen der Sehne waehrend sie unter Spannung steht, meist verwendet bei Compoundboegen |

| Rohschafttest | Abfeuern eines Pfeils ohne Befiederung, steckt dieser gerade im Ziel hat er den richtigen Spinewert, um den optimalen Spinewert zu ermitteln schiesst man am bestene eine Serie Rohschaefte mit unterschiedlichem Spine |

| Roving | Ist gleich wie Cloud Schiessen, jedoch mit unterschiedlichen unbekannten Entfernungen |

| Scheiben | Koennen sowohl aus einem spezilln Schaumstoff (zB Ethafoamo oder aehnlichem) bzw aus Stroh besthen. Manche sind schon vorbedruckt mit Zielkreisen, fuer andere Braucht man Zielscheiben (zB aus Papier) |

| Scope | ist ein Vergroesserungsglas welches an einem Visier angebracht wird |

| Selfbow | bezeichnet einen Vollholzbogen welcher nur aus Holz hergestellt wird |

| Sehnenschlaufe/Eye/Noose | sind die Schlaufen am jeweiligen Ende der Sehne (Schlaufe fuers obere Nock = Eye, Schlaufe fuers untere Nock = Noose) |

| Selfnock | ist eine in den Pfeilschaft eingeschnittene/eingearbeitet Kerbe |

| Spine | gibt die Steifigkeit eines Pfeils an |

| Standhoehe/Spannhoehe | gibt den Abstand von der Sehne bis zur tiefsten Stelle des Griffs |

| Tab | ist ein Stueck Leder welches dem Schutz der Finger an der Zughand dient |

| Takedown/Take-Down/T/D | leicht zerlegbarer Bogen (2x Wurfarme vom 1x Mittelstueck) fuer den Transport |

| Tiller | gibt das Biegeverhaeltniss zwischen oberen und unterem Wurfarm an |

| Tips | sind die Enden der Wurfarme und werden gerne steifer gearbeitet zB mit Overlays versterkt |

| Untergriff | Zeige-, Mittel- und Ringfinger sind unterhalb des Pfeils wahrend des Aufziehens |

| WA | Die WA (Wold Archery Federation) ist der Internationaler Dachverband der Bogenschuetzen, erarbeitet das Regelwerk fuer offizielle Tuniere usw. Vor 2021 bekannt als FITA (Fédération Internationale de Tir à l’Arc) 3 |

| Werfen/Wurf | In der Bogensport-Sprache wird auch oftmals “werfen” statt “schiessen” verwendet |

| Wurfarm/Limb | oberer und unterer Teil des Bogens, welcher die Energie beim Aufzug speichert |

| Zielpunkt | ein gedachter Punkt auf den die Spitze des Pfeiles beim Zielen zeigt |

| Ziel/Target | da wo der Pfeil rein soll ;) Teilt sich in mehrere Kategorien: Scheiben, 3D Ziele/3D Targets, 4D Ziele/4D Targets, Flaggen (Clout/Roving) |

| 3D Ziele/3D Targets | Stellen meist reale Tiere/Fabelwesen dar welche aus einem speziellen Schaumstoff gemacht |

| 4D Ziele/4D Targets | Beim 4D Bogenschiessen wird auf eine Leinwand geschossen welche von einem Beamer/Projektor bestrahlt wird und somit bewegliche Ziele darstellen kann. Das Taret/Pfeilstopp hinterbei ist meist eine spezielle Polymerschäum um die Pfeile langsam abzubremsen |

Official wikipedia entry - wikipeida/International_Field_Archery_Association

IFAA Regelwerke

- Erlaubte Bogen Typen/Styls der IFAA: https://www.ifaa-archery.org/documents/styles/

- Regelbuehcer: https://www.ifaa-archery.org/documents/rule-book/book-of-rules/

- Scorkarten: https://www.ifaa-archery.org/score-cards/score-card-templates/

WA Regelwerk

- Regeln fuer Target archery: https://www.worldarchery.sport/rulebook/article/793

- Regeln fuer Feld und 3D archery: https://www.worldarchery.sport/rulebook/article/3138

Docu review done: Wed 31 Jul 2024 01:55:34 PM CEST

3D Archery

Table of Content

Trefferpunkte ermitteln

Beinhaltet nicht alle Regelwerke fuer 3D Trefferpunkte

Allgemeine Tabelle

| Pfeil | Treffer | Punkte |

|---|---|---|

| 1 | Kill | 20 |

| Körper | 16 | |

| 2 | Kill | 14 |

| Körper | 10 | |

| 3 | Kill | 8 |

| Körper | 4 |

DBSV-Waldrunde (3 Pfeile)

| Pfeil | Treffer | Punkte |

|---|---|---|

| 1 | Kill | 15 |

| Körper | 12 | |

| 2 | Kill | 10 |

| Körper | 7 | |

| 3 | Kill | 5 |

| Körper | 2 |

DBSV-Reglement fuer Jagdrunde/Hunt (1 Pfeil)

| Pfeil | Treffer | Punkte |

|---|---|---|

| 1 | CenterKill | 15 |

| Kill | 12 | |

| Körper | 7 |

IFAA-Reglement fuer unmarkierte/markierte Ziele (3 Pfeile)

| Pfeil | Treffer | Punkte |

|---|---|---|

| 1 | Kill | 20 |

| Körper | 18 | |

| 2 | Kill | 16 |

| Körper | 14 | |

| 3 | Kill | 12 |

| Körper | 10 |

IFAA-Reglement fuer Standard Runde (2 Pfeile)

| Pfeil | Treffer | Punkte |

|---|---|---|

| 1 | CenterKill | 10 |

| Kill | 8 | |

| Körper | 5 | |

| 2 | CenterKill | 10 |

| Kill | 8 | |

| Körper | 5 |

IFAA-Reglement fuer Jagdrunde/Hunt (1 Pfeil)

| Pfeil | Treffer | Punkte |

|---|---|---|

| 1 | CenterKill | 20 |

| Kill | 16 | |

| Körper | 10 |

3D nach WA oder FITA

| Pfeil | Treffer | Punkte |

|---|---|---|

| 1 | CenterKill | 11 |

| Kill | 10 | |

| Vitalbereich | 8 | |

| Körper | 5 |

Bureaucracy

finanzonline

Re-request UID number

If you lost for what ever reason your UID number of your own company, you can re-request it in the finanzonline.at portal.

To do so, first you have to authenticate with your account which holds tax-numer (german: Steuernummer).

Next, on the top you will find Additional Service (german: Weitere Services), click on that one.

There you will find now three sections, the on in the middle will show the point Neuerliche Bekanntgabe der UID-Nummer.

As soon as you select it, you will see a drop-down box shown below, there you have to select then the tax-number where the UID number is linked to and click then on request/continue.

Follow the messages displayed and you will soon receive it in your postbox (digital).

Full documentations

Docu review done: Wed 31 Jul 2024 01:57:00 PM CEST

Ubiquiti Controler on PI

Table of content

Installation of controller

The ubiquiti controller is needed, to setup/configure you ubiquiti devices. Once configured, you can turn it off again.

Install Java JDK Headless

$ apt install openjdk-[0-9]+-jre-headless -y

Install haveged

In order to fix the slow start-up of the UniFi controller, we have to install haveged.The reason for the slow start-up is basically the lack of user interaction (no mouse movements) causing issues with the standard mechanisms for harvesting randomness.

$ apt install haveged

Add Debian repo

Install apt-transport-https

If not already installed (default since Buster)

$ apt install apt-transport-https

$ echo 'deb https://www.ui.com/downloads/unifi/debian stable ubiquiti' | sudo tee /etc/apt/sources.list.d/ubnt-unifi.list

$ wget -O /etc/apt/trusted.gpg.d/unifi-repo.gpg https://dl.ui.com/unifi/unifi-repo.gpg

Upate and install controuler

$ apt update

$ sudo apt install unifi

Fun with_linux

Docu review done: Tue 17 Oct 2023 10:54:59 AM CEST

ten days

It’s also, in addition to the oddities in Sweden, not a unique event, and the most recent civil (i.e. non-religious) calendar to change from Julian to Gregorian was in the 20th Century.

It’s worth noting that “the Western world” (as a whole) didn’t change in 1582. The Roman Catholic world (most of it, anyway) changed then, but the British were notoriously uncooperative with the Papists, and waited until September 1752…

$ cal 9 1752

September 1752

Su Mo Tu We Th Fr Sa

1 2 14 15 16

17 18 19 20 21 22 23

24 25 26 27 28 29 30

Source: thedilywtf/ten-days

Hardware information

Docu review done: Wed 31 Jul 2024 01:57:24 PM CEST

Aruba

Table of content

IAP0215RW

Factory reset

- Power off AP

- Hold reset button, while powering on AP

- The power led will flesh very fast after some seconds

- Release the reset button

- After some seconds the power led will flash again very fast - this indicates that the reset was performed

Default user and pwd

| FirmwareVersion | Username | Password |

|---|---|---|

| <8.5 | admin | admin |

=8.5 | admin | IAP-Serialnumber

Docu review done: Wed 31 Jul 2024 01:57:40 PM CEST

Aten CS1944DP

Table of content

- Port Switching

- Auto Scanning

- USB Reset

- Hotkey Setting Mode or HSM

- List Switch Settings

- Beeper Control

- Restore Default Settings

- Keyboard Emulation Control

- Mouse Emulation Control

- Mouse Port Switching

Port Switching

Cycle to next port

Keyboard cycle

| Hotkey | Action |

|---|---|

Scroll Lock Scroll Lock Enter | Brings KVM, USB hub and audio to the next port |

Scroll Lock Scroll Lock k Enter | Brings KVM to the next port |

Scroll Lock Scroll Lock u Enter | Brings USB hub to the next port |

Scroll Lock Scroll Lock a Enter | Brings audio to the next port |

Mouse cycle

reuqires Mouse port Switching to be enabled

| Hotkey | Action |

|---|---|

Scroll wheel Scroll wheel | Brings KVM, USB hub and audio to the next port |

Go to specific port

| Hotkey | Action |

|---|---|

Scroll Lock Scroll Lock [1-4] Enter | Brings KVM, USB hub and audio to the port [1-4] |

Scroll Lock Scroll Lock [1-4] k Enter | Brings KVM to the port [1-4] |

Scroll Lock Scroll Lock [1-4] u Enter | Brings USB hub to the port [1-4] |

Scroll Lock Scroll Lock [1-4] s Enter | Brings audio to the port [1-4] |

k,u,scan be combined in any possible way

Alternate Port Switching Keys

To use the alternate hot keys for switching Ports perform the following:

- Invode HSM

- Press and release

tor - Invode HSM

- Press and release

x

After that you use instead of Scroll Lock Ctrl e.g.

Ctrl Ctrl 1 Enter to switch all modes to port 1

Auto Scanning

With autoscanning, the KVM iterates over all existing ports without any user interaction

| Hotkey | Action |

|---|---|

Scroll Lock Scroll Lock a Enter | Enables autoscan every 5 seconds from port to port |

Scroll Lock Scroll Lock a [1-9]{1,2} Enter | Enables autoscan every [1-9]{1,2}** seconds** from port to port |

To exit the auto scan mode again, press Esc or Space

USB Reset

If the USB loses focus and needs to be reset, do the following:

- Invode HSM

- Press and release

F5

Hotkey Setting Mode or HSM

hihihih HSM XD To invoke HSM, perform the following:

- Press + Hold

Num Lock - Press + release

- - Release

Num Lock

When HSM is active, Caps Lock and scroll Lock LEDs are flashing

To exit HSM, either press ESC or Space

HSM Summary Table

| Hotkey | Action |

|---|---|

F1 | Sets the keyboard and mouse to SPC mode (to work with pecial os as a standard 104 keyed keyboard and mose) |

F2 | Enable Mac keyboard emulation |

F4 | Print the current switch config into editor (insert mode needed) |

F5 | Performs a USB keyboard and mouse reset |

F6 [0-9]{2,2} Enter | Sets keyboard languarge (US Eng: 33, Frensch: 08, German: 09, Japanese: 15) |

F10 | Enables Windows keyboard emulation |

b | Toggles beep |

e | Toggles power-on-detection function |

h | Toggles HSM invocation keys |

m | Toggles mouse emulation |

n | Toggles keyboard emulation |

r Enter | Reset hotkey settings do default |

t | Toggles port switching invocation keys |

u p g r a d e Enter | Inokes firmeware upgrade mode |

w | Toggles mouse port switching mode |

x Enter | Toggles port switching invocation keys |

Esc or Space | Quits and exits setting mode |

q [1-4] Enter | Enable/disable the monitor re-detection |

s | Alternative manual port selection settings |

Alternate HSM Invocation Keys

To use the alternate hot keys for HSM perform the following:

- Invode HSM

- Press and release

h

By performing this, you change the HSM hotkey to the procedue:

- Press + Hold

Ctrl - Press + release

F12 - Release

Ctrl

List Switch Settings

To see a list of the current switch settings, do the following:

- Open a text editor (go into insert mode if needed)

- Invode HSM

- Press and release

F4

Beeper Control

To toggle the beep sound do the following:

- Invode HSM

- Press and release

b

Restore Default Settings

To reset the config to its default Hotkey settings, do the following

- Invode HSM

- Press and release

r - Press and release

Enter

Keyboard Emulation Control

To toggle between keyboard emulation enabled and disable, do the following:

- Invode HSM

- Press and release

n

Mouse Emulation Control

To toggle between mouse emulation enabled and disable, do the following:

- Invode HSM

- Press and release

m

Mouse Port Switching

Mouse port switching allows you to use the mouse whle button (clicked twice) to switch ports. For mouse port switching, mouse emulation must be neabled. To enable or disable mouse port switching, do the following:

- Invode HSM

- Press and release

w

Docu review done: Wed 31 Jul 2024 01:59:16 PM CEST

Casio Protrek 5470

Table of Content

General

..-----------..

./ \_/ \.

+-----./ 12 \.-----+

(D) | ./ \. | (C)

| ./ \. |

./ \.

/ \

/| |\

|| | \

|| |--+

|| --9 3-- |--| -- Krone

|| |--+

|| | /

\| +------------------+ |/

\ | | /

\. | | ./

| \. +------------------+ ./ |

(B) | \. 6 ./ | (A)

+-----\. +---------+ ./-----+

\. | (L) | ./

..+_________+..

Nachstellen der Zeigergrundstellung

- Ziehen Sie im Uhrzeitmodus die Krone vor

- Halten Sie

Agedrückt, bis nach mindestens fünf Sekunden HAND SET blinkt und dann HAND ADJ in der Digitalanzeige erscheintDies bezeichnet den Korrekturmodus

- Drücken Sie die Krone zurück

Dies stellt alle Zeiger (Modus, Stunde, Minute, Sekunde) in ihre Grundstellungen zurück

OpenWRT

Table of content

- Firewall Zone

- Helpers for troubleshooting

- [Issues][#issues]

Firewall Zone

General FWZ

Firewall Zones have three generick “quick” settings

- Input

- Output

- Forward

Within these settings the following is defined

Input FWZ Rule

Permits all networks within Source-Zone to all networks inside of the Desitnation-Zone

Output FWZ Rule

Permits all networks the Destination-Zone to all networks inside of the Source-Zone

Forward FWZ Rule

Permits all networks within Source-Zone to talk to all the other networks inside the Source-Zone

Helpers for troubleshooting

nft

To trace rules in nft you can use a similar command like that:

$ nft insert rule inet fw4 prerouting ip saddr <your subnet/cidr notation> meta nftrace set 1

and by running the command:

$ nft monitor trace

you will be able to trace packages and there permissions.

To remove the nft rule again you can simply restart your firewall by using the command /etc/init.d/firewall restart or the command nft delete rule inet fw4 prerouting ip saddr <your subnet/cidr notation> meta nftrace set 1`

For example

$ nft insert rule inet fw4 prerouting ip saddr 10.10.0.0/24 meta nftrace set 1

$ nft monitor trace

trace id 8a61b435 inet fw4 prerouting packet: iif "lan1.2" ether saddr ab:ab:ab:ab:3f:3f ether daddr ab:ab:ab:ab:3f:cc ip saddr 10.10.0.2 ip daddr 8.8.8.8 ip dscp cs0 ip ecn not-ect ip ttl 62 ip id 31749 ip protocol icmp ip length 84 icmp type echo-request icmp code 0 icmp id 2 icmp sequence 1992

trace id 8a61b435 inet fw4 prerouting rule ip saddr 10.10.0.0/24 meta nftrace set 1 (verdict continue)

trace id 8a61b435 inet fw4 prerouting verdict continue

trace id 8a61b435 inet fw4 prerouting policy accept

trace id 8a61b435 inet fw4 mangle_forward packet: iif "lan1.2" oif "eth0" ether saddr ab:ab:ab:ab:3f:3f ether daddr ab:ab:ab:ab:3f:cc ip saddr 10.10.0.2 ip daddr 8.8.8.8 ip dscp cs0 ip ecn not-ect ip ttl 61 ip id 31749 ip protocol icmp ip length 84 icmp type echo-request icmp code 0 icmp id 2 icmp sequence 1992

trace id 8a61b435 inet fw4 mangle_forward verdict continue

trace id 8a61b435 inet fw4 mangle_forward policy accept

trace id 8a61b435 inet turris-sentinel dynfw_block_hook_forward packet: iif "lan1.2" oif "eth0" ether saddr ab:ab:ab:ab:3f:3f ether daddr ab:ab:ab:ab:3f:cc ip saddr 10.10.0.2 ip daddr 8.8.8.8 ip dscp cs0 ip ecn not-ect ip ttl 61 ip id 31749 ip protocol icmp ip length 84 icmp type echo-request icmp code 0 icmp id 2 icmp sequence 1992

trace id 8a61b435 inet turris-sentinel dynfw_block_hook_forward verdict continue

trace id 8a61b435 inet turris-sentinel dynfw_block_hook_forward policy accept

trace id 8a61b435 inet fw4 forward packet: iif "lan1.2" oif "eth0" ether saddr ab:ab:ab:ab:3f:3f ether daddr ab:ab:ab:ab:3f:cc ip saddr 10.10.0.2 ip daddr 8.8.8.8 ip dscp cs0 ip ecn not-ect ip ttl 61 ip id 31749 ip protocol icmp ip length 84 icmp type echo-request icmp code 0 icmp id 2 icmp sequence 1992

trace id 8a61b435 inet fw4 forward rule jump upnp_forward comment "Hook into miniupnpd forwarding chain" (verdict jump upnp_forward)

trace id 8a61b435 inet fw4 upnp_forward verdict continue

trace id 8a61b435 inet fw4 forward verdict continue

trace id 8a61b435 inet fw4 forward policy drop

Issues

No routing into allowed interface

If you have the issue that your packages are not going into the interace you have allowed it (e.g. via zone configuration) have checked the following

nft monitor trace(like shown beneath hints) shows you aforward policy dropbefore it enters the target interfaceuci show firewall | grep zonecontains asnetworkyour interface name and asnameyour zone name,output/forward/inputcan be ignored in this caseuci show firewall | grep forwardingcontains assrcyour zone name and asdestyour wan interface

have a look in your nft forwarder list. In there, you should find one line for your interface.

$ nft list chain inet fw4 forward

table inet fw4 {

chain forward {

type filter hook forward priority filter; policy drop;

ct state vmap { invalid : drop, established : accept, related : accept } comment "!fw4: Handle forwarded flows"

iifname "eth0" jump forward_wan comment "!fw4: Handle wan IPv4/IPv6 forward traffic"

iifname "lan1.1" jump forward_wan comment "!fw4: Handle wan IPv4/IPv6 forward traffic"

iifname "lan1.3" jump forward_wan comment "!fw4: Handle wan IPv4/IPv6 forward traffic"

iifname "......" jump forward_wan comment "!fw4: Handle wan IPv4/IPv6 forward traffic"

}

}

If not, then the chances are high, that either you have a racecondition when the firewall ist starting but the interface was not up or your interafce causes some unwanted beahvoir.

In both scenariouse restart on your terminal the firewall like so:

$ /etc/init.d/firewall restart

Based on the output, you have to continue then your work.

Docu review done: Mon 06 May 2024 08:29:01 AM CEST

Backlighting modes

FN+INS (3 Modes):

- Trail of light

- Breathing

- Normally on

FN+HOME (3 Modes):

- Ripple Graff

- Pass without Trace

- Coastal

FN+PGUP (3 Modes):

- Hurricane

- Accumulate

- Digital Times

FN+DEL (3 Modes):

- Go with stream

- Cloud fly

- Winding Paths

FN+END (3 Modes):

- Flowers blooming

- Snow winter Jasmine

- Swift action

FN+GPDN (3 Modes):

- Both ways

- Surmount

- Fast and the furious

Keyboard functions

| Keybinding | Description |

|---|---|

FN+WIN | Lock Windows |

FN+- | Lower running speed adjustable |

FN++ | Increase running speed adjustable |

FN+Left | Adjust the backlight running direction |

FN+Right | Adjust the backlight running colour, colors switching by cycle |

FN+Down | Decrease brightness |

FN+UP | Increase brightness |

FN+PRTSC | Restore default as flow mode |

Shokz

Tablce of content

Aftershokz

Audrey is the nice girl in your ears ;)

Buttons

The (After)shokz have the following buttons

Vol+Vol-MF1

Hotkeys

| Name | Shokz state | Key(s) | Time | LED | Description |

|---|---|---|---|---|---|

| Powering on | Powered off | Vol+ | ~3s | blue | Booting device |

| Powering off | Powered on | Vol+ | ~3s | red | Shutting down device |

| Pairing mode | Powered off | Vol+ | Hold | red/blue2 | Boots them in pariring mode |

| Multi pairing | Pairing mode | MF1 + Vol+ | ~3s | Enables pairing with multible devcies (2) | |

| Reset pairings | Pairing mode | MF1 + Vol+ + Vol- | Hold | red/blue2 | Removes pairings stored in local cache |

| Power status | Powered on | Vol+ | ~3s | Provides current power status | |

| Mute mic | Unmuted mic | Vol+ + Vol- | ~3s | Mutes systsm input, does not work with all devices | |

| Unmutes mic | Muted mic | Vol+ + Vol- | ~3s | Unmuntes system input, does not work with all devices |

MF stands for the multifunction button (all buttons can be found at Buttons

Flashs between these colours

Procedures

Pairing two devices

To make use of two deviecs you have to go throug the following procedure

The keys to use, can be seen in the Hotkeys table.

If you have noever paired or after reset:

- Enter Pairing mode

- Enable Multi pairing, Audrey will say “Multipoint Enabled.”

- Pair first device. Audrey says “Connected.”

- Turn your headphones off

- Re-enter pairing mode

- Pair the second device. Audrey says “Device Two Connected.”

- Turn your headphones off

Already paried with one device:

- Enter Pairing mode

- Enable Multi pairing, Audrey will say “Multipoint Enabled.”

- Pair second device. Audrey says “Connected.”

- Turn your headphones off

Docu review done: Wed 31 Jul 2024 02:05:27 PM CEST

TS100 Loetkolben

Table of Content

Specifications

| Operating voltage | Power | The fastest time to heat up (from 30C to 300C) |

|---|---|---|

| 12V | 17W | 40s |

| 16V | 30W | 20s |

| 19V | 40W | 15s |

| 24V | 65W | 11s |

Firmware Upgrade

- https://miniware.com.cn and download latest TS100 firmeare

- Conect TS100 with USB, meanwhile press Button

Ato enter DFU mode - Copy hex firmware file to root dir

- Wait till file extension changes from hex to

rdy - Disconnect USB TS100

Docu review done: Wed 31 Jul 2024 02:05:35 PM CEST

Teufel AIRY-True-Wireless

Table of Content

- Multi Functions Beruehrungstaste

- Bedienung

- LED Status

- Kopplung Bluetooth

- Multi Functions Beruehrungstaste

______

/ ___ \___

/ /xxx\ \ )

\ \xxx/ / |

\ ___/___)

| |

| |

| |

\_/

Bedienung

| Legende | Beschreibung |

|---|---|

O | Auf Multifunktionstaste tippen |

(---) | 2,5 Sekunden Multifunktionstaste gedrueckt halten |

Musik

| Befehl | Aktion |

|---|---|

O | Play/Pause |

OO | naechster Titel |

OOO | vorheriger Titel |

Anruf

| Befehl | Aktion |

|---|---|

O | Anruf annehmen |

(---) | Anruf ablehnen |

O | Gespraech beenden |

OO | Anruf halten und zweiten Anruf annehmen |

OO | zwischen zwei Anrufen wechseln |

(---) | Um einen Anruf nach Beendigung ders aktuellen Anrufs aus der Warteschleife zu hohlen |

Sonstiges

| Befehl | Aktion |

|---|---|

(---) | Sprachassistent aktivieren bzw deaktivieren |

LED Status

| LED | Status |

|---|---|

| schnell Weiss blinkend | Kopplungsvorgang |

| konstant Weiss leuchtend | verbunden |

Kopplung Bluetooth

- AIRY True aus dem Lade-Etui nehmen

- Halte linke und rechte Multifunktions-Taste fuer ca 2,5 Sek. gedrueckt

- Nun kann neu verbunden werden

Docu review done: Wed 31 Jul 2024 02:05:45 PM CEST

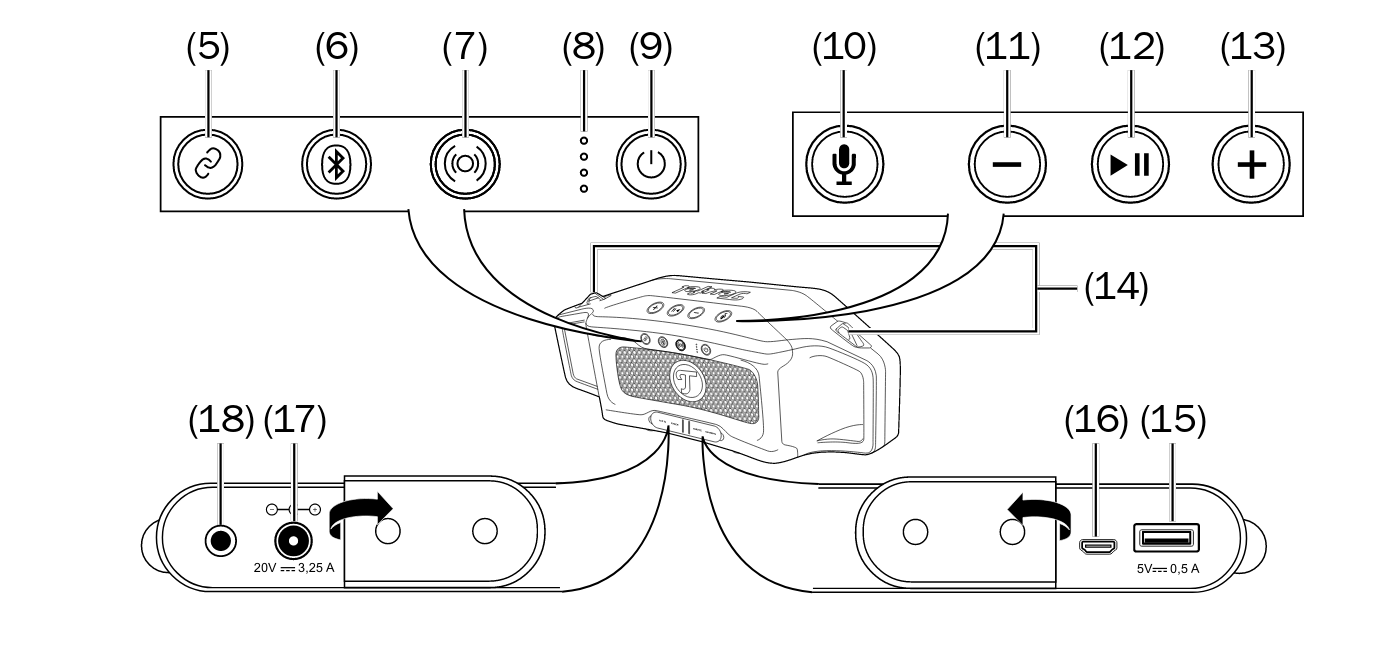

Teufel Rockstar Cross

Table of Content

Buttons und Slots

- Connect-Modus ein/aus (fuer 3 sekunden gedrueckt halten)

- Bluetooth

- Fuer 3 Sekunden: Pairing Modus

- Fuer 10 Sekunden: alle gespeicherten BL Verbindungen loeschen

- Outdorr-Modos ein/aus

- Led-Anzeige fuer Akkuladung bzw Akkustatus

- Power-Button

- Fuer 2 Sekunden: Ein/Aus schalten

- einmal kurz dreuecken: zeigt Akkuladung mit leds an

- Sprachassistent aktivieren bzw Telefonanruf annehmen/auflegen

- Lautstaerke reduzieren

- Wiedergabe/Pause bzw bei Anruf annhemen/auflegen

- Lautstaerke erhoehen

- Osen fuer Tragegurt

- USBG-Ansluss zur Stromversorgung fuer Zuespieler (Type A, 5V, 0,5A)

- USB-Mirco-Anschluss fuer Service

- Anschluss fuer Netztteil

- AUX IN, Stereo-Eingang mit 3,5mm Klinkenbuchse

Akku

| Akkustand | LEDs |

|---|---|

| 76-100% | alle 4 LED leuchten |

| 51-75% | 3 LED leuchten |

| 26-50% | 2 LED leuchten |

| 11-25% | 1 LED leuchtet |

| <10% | unterste LED blinkt |

Laden

| Ladestand | LEDs |

|---|---|

| 100% | alle 4 LED leuchten |

| 75-99% | 3 LED leuchten |

| 50-74% | 2 LED leuchten |

| 25-49% | 1 LED leuchtet |

| <25% | unterste LED blinkt |

Outdoor Modus

Der Outdoor-Modus ist eine Technik, zum Verbessern des Hörerlebnisses draußen in einer lauten und unkont-rollierten Umgebung.

Dies wird ermöglicht durch Kompensation von bestimmten Frequenzen. Mit der Taste 7 schalten Sie de Outdoor-Modus ein und aus.

Connect Modus

Sie können den ROCKSTER CROSS mit kompatiblen Geräten per Blue-tooth koppeln, damit beide Geräte die gleiche Musik abspielen. Das funktioniert nur mit dem ROCKSTER-CROSS und dem ROCKSTER GO. Für die Kopplung müssen beide Geräte direkt nebeneinander gestellt werden.

Anschließend kann der Abstand zwischen den gekoppelten Geräten bis zu 5 Meter betragen.

So können Sie mit zwei Geräten gleichzeitig eine größere Fläche beschallen.

- Drücken und halten Sie für ca. 3 Sekunden die Taste

5. Die Kopplung ist gestartet und der Ring um die Taste pulsiert in weiß. - Starten Sie auch am zweiten Abspieler die Kopplung (siehe Bedienungsanleitung des anderen Gerätes). Nach kurzer Zeit verbinden sich die beiden Geräte. Am ROCKSTER CROSS leuchtet der Ring um die Taste

5nun ständig in weiß und ein Ton signali-siert die Verbindung.Beide Geräte spielen nun die gleiche Musik ab. - Zum Ausschalten des Connect Modus, drücken und halten Sie für ca. 3 Sekunden die Taste

5.Der Ring um die Taste erlischt und die Verbindung ist beendet.

Party Modus

Wenn Sie gleichzeitig zwei Zuspieler benutzen, können Sie auf dem einen Gerät bereits den nächsten Titel heraussuchen, während das andere Gerät abspielt. Wenn der Titel zu Ende ist, drücken Sie dort die Stopp- oder Pausen-Taste und starten die Wiedergabe am anderen Gerät.

Wiedergabe

- Wiedergabe unterbrechen: Taste

12kurz drücken - Wiedergabe fortsetzen: Taste

12kurz drücken - Nächsten Titel wiedergeben: Tasten

12und +13kurz zusammen drücken - Titel von vorn beginnen: Tasten

12und –13kurz zusammen drücken - Vorherigen Titel wiedergeben: Tasten

12und –13zweimal kurz zusammen drücken

Docu review done: Wed 31 Jul 2024 02:05:52 PM CEST

Ubuquity

Table of content

AP AC PRO

VLAN Configuration

Recomended setup form Ubiquity Support

To confugre an SSID using a dedecated VLAN and the AP using an Management Network, you need to have the following in place.

- Configure the VLAN for the SSID

- Open the WLAN network settings (Settings -> Wireless Network -> Edit or Devices -> YourDevice -> Config -> WLAN -> Edit)

- On both, you will find a filed wither called

VLANorwith VLAN ID - Modify it by inserting the

VLAN IDand click on save and push the changes to the device

- Your switch needs to be configured like this

- The management VLAN needs to be attached as

untaged(+PVID) - The needed VLAN for the SSID needs to be attached as

taged

- The management VLAN needs to be attached as

If your AP AC PRO is not chaning the VLAN for the SSID, change it again to something different and set it back to the wanted VLAN and publish the changes

Valve Index

Table of content

Preamble

The Valve Index is a consumer virtual reality headset created and manufactured by Valve. The headset was released on June 28 2019. The Index is a second-generation headset and the first to be fully manufactured by Valve.

From the very beginning it was possible to run those devices on Linux. However with some limitations, drawbacks and workarounds. Purpose of this document is to collect those workaround and limitations and how to deal with them.

Most recent tests and work was conducted on an up to date Arch Linux desktop running SteamVR.

Setup

When first launching SteamVR I got a notice that root privilegues are required and if confirmed, it dies with an error.

Workaround is executing the setup script in a terminal which can ask you for your sudo password: ~/.local/share/Steam/steamapps/common/SteamVR/bin/vrsetup.sh or ~/.steam/root/steamapps/common/SteamVR/bin/vrsetup.sh

Video

tldr: X11 is dead; use wayland.

X11

Tested with i3 Works somewhat out of the box. With limitations like the window which is supposed to show what the person wearing the headset sees simply does not diesplay anything. Same goes with the settings from your desktop. The window which is supposed to show the setting just stays emtpy/dead. Settings can be adjusted wearing the headset though, so inside VR.

Wayland Sway

Sway and SteamVR just “works”. Good performance, did not notice any limitations. The “Setup” step might be required.

Wayland Gnome

Did not test Gnome myself, but got positive reports. The “Setup” step might be required.

Audio

The most important bit of information is that the Index has two audio devices. One mic/source, which is available through usb. And one (stereo?) Sink/Output though DisplayPort. Keep that in mind when troubleshooting!

Pulse Audio

This was some time ago, but I know of people who ran it without issue using pulse audio in the past. They all run Pipewire by now.

Pipewire

Pipewire registers your graphics card as one audio device. I can imagine it is possible to change that, but I did not find the time to investigate yet.

If you have a screen with speakers on port 1, this will be used if you select your graphics card as output device unless you change it.

Install pavucontrol (pavuctonrol-qt) and run it. Switch to the configuration tab and find your graphics card. (in my case: Navi 31 HDMI/DP-Audio)

Under Profile select the right port you’ve connected your Index to. I’ve connected it to the second DP of my card, so I have to select a “(HDMI 2)” Entry.

“Digital Stereo” is confirmed working. I’ve not tested 5.1 or 7.1.

Applications

Docu review done: Wed 31 Jul 2024 02:06:21 PM CEST

Ansible

Toable of content

Installation

$ apt install

Tips and Tricks

List only target hosts

If you are not sure if your inventory and your limitation works, you can use the parameter --list-hosts to get the full target host list.

$ ansible-playbook myplaybook.yml --limit "liveservers:!live01-puppetmaster" --list-hosts

play #1 (all): all TAGS: []

pattern: [u'all']

hosts (52):

live01-puppettest02

live01-puppettest03

live01-puppettest01

live01-test1

live01-test2

live01-dnsserver

...

Docu review done: Wed 31 Jul 2024 02:06:52 PM CEST

Augeas

Table of Content

Description

augeas is a configuration editing tool. It parses configuration files in there native formats and transform them into a tree.

Configuration changes are made by manipulating this tree and saving it back to native config files.

augeas uses lenses to detect the language of a configuration file.

The default lenses can be found /usr/share/augeas/lenses//usr/share/augeas/lenses/dist or you have a look at the online documentation stock lenses.

augeas official homepage has a small quick tour which also gives you same samples and useful information.

Installation

If you are running debian, you can simply install it with apt

$ apt install augeas-tools

Of course you can install it via several other methods as well, this link will bring you to the download page of augeas.

And if you have

puppetinstalled on your system, you could even use it to perform commands withaugeaswithout installing any additional package. All what you need is to add the module augeas_core and call the class according to your needs.

The augeas-tools package installs three tools for you:

- augmatch: inspect and match contents of configuration files

- augparse: execute

augesmodule - augtool: full control of

augeas

augmatch

augmatch prints the tree that augeas generates by parsing a configuration file, or only those parts of the tree that match a certain path expression.

Parsing is controlled by lenses, many of which ship with augeas.

augmatch to select the correct lens for a given file automatically unless one is specified with the --lens option.

augmatch Parameters

| Parameters | Description |

|---|---|

[-e/--exact] | Only print the parts of the tree that exactly match the expression provided with --match and not any of the descendants of matching nodes |

[-L/--print-lens] | Print the name of the lens that will be used with the given file and exit |

[-m/--match] <expr> | Only print the parts of the tree that match the path expression expr. All nodes that match expr and their descendants will be printed |

[-o/--only-value] | Only print the value |

[-q/--quiet] | Do not print anything. Exit with zero status if a match was found |

augparse

Execute an augeas module, most commonly to evaluate the tests it contains during the development of new lenses/modules.

augtool

augeas is a configuration editing tool. It parses configuration files in their native formats and transforms them into a tree. Configuration changes are made by

manipulating this tree and saving it back into native config files.

augtool provides a command line interface to the generated tree. COMMAND can be a single command as described under augtool Commands.

When called with no COMMAND, it reads commands from standard input until an end-of-file is encountered.

augtool Commands

This is a small list of available regular used commands:

Admin commands

| Command | Description |

|---|---|

help | shows help ;) |

save | Save all pending changes to disk. Unless either the -b or -n command line options are given, files are changed in place |

Read commands

| Command | Description |

|---|---|

ls <path> | list direct child’s of path |

match <path pattern> [value] | lists paths which matches path pattern allows value filter |

print <path> | prints all childes of path, if no path given, it prints all system wide paths |

Write commands

| Command | Description |

|---|---|

rm <path> | deletes path and all its children from the tree |

set <path> <value> | sets the value in path, if the path is not in the tree yet, it and all its ancestors will be created |

touch <path> | creates path with null value in tree |

Samples

augtool match

This will find all paths that match the path pattern and if you add a value it will filter the result with this as well.

$ augtool match "/files/etc/ssh/sshd_config/*/" yes

/files/etc/ssh/sshd_config/PubkeyAuthentication

/files/etc/ssh/sshd_config/UsePAM

/files/etc/ssh/sshd_config/PrintLastLog

/files/etc/ssh/sshd_config/TCPKeepAlive

augtool print

Use the print command to list all paths and values which matches a path pattern:

$ augtool print "/files/etc/sudoers/spec[1]/host_group/command"

/files/etc/sudoers/spec[1]/host_group/command = "ALL"

/files/etc/sudoers/spec[1]/host_group/command/runas_user = "ALL"

/files/etc/sudoers/spec[1]/host_group/command/runas_group = "ALL"

augtool last value or item

If you don’t know how long a array is, you can use for example the internal command last() to operate on the last value or item

$ augtool print "/files/etc/hosts/*/alias[last()]"

/files/etc/hosts/1/alias = "local_dude"

/files/etc/hosts/2/alias = "my_second_dude"

/files/etc/hosts/3/alias = "my_third_dude"

augtool set

To modify values, you use the command set followed by the path and the new value. If the path does not exists, it will be generated.

$ augtool set "/files/etc/puppetlabs/puppetserver/conf.d/puppetserver.conf/@hash[. = 'http-client']/@array[. = 'ssl-protocols']/1" "TLSv1.3"

puppet augeas

As I have mentioned at the top of the documentation, you can control augeas with puppet as well, this will do the same as the above set sample

augeas { 'puppetserver.conf_augeas_tls':

context => '/files/etc/puppetlabs/puppetserver/conf.d/puppetserver.conf',

changes => [

"set @hash[. = 'http-client']/@array[1] 'ssl-protocols'",

"set @hash[. = 'http-client']/@array/1 'TLSv1.3'",

],

notify => Service['puppetserver'],

}

augeas { "sshd_config":

changes => [ "set /files/etc/ssh/sshd_config/PermitRootLogin no", ],

}

augeas { "sshd_config":

context => "/files/etc/ssh/sshd_config",

changes => [ "set PermitRootLogin no", ],

augeas { "export foo":

context => "/files/etc/exports",

changes => [

"set dir[. = '/foo'] /foo",

"set dir[. = '/foo']/client weeble",

"set dir[. = '/foo']/client/option[1] ro",

"set dir[. = '/foo']/client/option[2] all_squash",

],

}

PA paths for numbered items

augeas { "localhost":

context => "/files/etc/hosts",

changes => [

"set *[ipaddr = '127.0.0.1']/canonical localhost",

"set *[ipaddr = '127.0.0.1']/alias[1] $hostname",

"set *[ipaddr = '127.0.0.1']/alias[2] $hostname.domain.com",

],

}

augeas { "sudojoe":

context => "/files/etc/sudoers",

changes => [

"set spec[user = 'joe']/user joe",

"set spec[user = 'joe']/host_group/host ALL",

"set spec[user = 'joe']/host_group/command ALL",

"set spec[user = 'joe']/host_group/command/runas_user ALL",

],

}

PA loading generic lense for non standard files

augeas { "sudoers":

lens => "Sudoers.lns",

incl => "/foo/sudoers",

changes => "...",

}

Docu review done: Wed 31 Jul 2024 02:07:49 PM CEST

avconv

Table of content

General

avconv is used to convert audio files

samples ogg to mp3

single file convert

$ avconv -i inputfile.ogg -c:a libmp3lame -q:a 2 destination.mp3

convert multible files from ogg to mp3

$ for f in ./* ; do avconv -i $f -c:a libmp3lame -q:a 2 $(echo $f | sed -e 's/.ogg/.mp3/') ; done ; rm *.ogg

Docu review done: Wed 31 Jul 2024 02:08:08 PM CEST

bind

Table of Content

Setup

$ apt install bind9

bind9 cache

To interact with the cache of bind9/named you will use the binary rndc.

dump cache

To dump the cache, use the parameter dumpdb -cache for rndc

$ rndc dumpdb -cache

This will return no output, but it will create a file with path /var/cache/bind/named_dump.db

This file can be opend with any fileviewer you like hust,vim,hust ;) or of course parsed with grep, sed, …

flush cache

specific record

If you know the record name you can also just flush the cache only for the specific record like this:

$ rndc flushname <recordname>

Or if you want to flush also all records below that name, you can use this:

$ rndc flushtree <recordname>

A sample for the above mentioned comments:

$ rndc flushname google.at

$ rndc flushtree google.at

full cache

To flush the cache of bind, who would expect it coming, just flush it

$ rndc flush

But now you should reload the data ;)

$ rndc reload

If everything is fine, you should see the words server reload successful

Docu review done: Mon 03 Jul 2023 16:16:06 CEST

byzanz

Table of Content

General

byzanz is a desktop recorder and command line tool allowing you to record your current desktop or parts of it to an animated GIF, Ogg Theora, Flash or WebM. This is especially useful for publishing on the web.

byzanz also allows recording of audio, when the output format supports it.

Installation

If you are running debian, than it will be easy for you, just use apt

$ apt install byzanz

Usage

To start the recording, run

$ byzanz-record [OPTOIN...] </path/output_file>

And to stop the recording, just wait till the time specified with -d/--duration (default 10 seconds) are over

or

You can just specify instead of -d the parameter -e <command>. The capture will run till the <command> finished.

Capture

The capture is done with the binary byzanz-record which offers you some parameters/options to specify what you need:

| Parameter | Description |

|---|---|

-?/--help | Show help options |

--help-all | Show all help options |

--help-gtk | Show GTK+ Options |

-d/--duration=SECS | Duration of animation (default: 10 seconds) |

-e/--exec=COMMAND | Command to execute and time |

--delay=SECS | Delay before start (default: 1 second) |

-c/--cursor | Record mouse cursor |

-a/--audio | Record audio |

-x/--x=PIXEL | X coordinate of rectangle to record |

-y/--y=PIXEL | Y coordinate of rectangle to record |

-w/--width=PIXEL | Width of recording rectangle |

-h/--height=PIXEL | Height of recording rectangle |

-v/--verbose | Be verbose |

--display=DISPLAY | X display to use |

X and Y

As you know, your screens have x and y coordinates.

0(x),0(y) is the top left corner of your most left defined screen.

x ---------->

┌─────────────┐┌─────────────┐

y │ ││ │

¦ │ ││ │

¦ │ Screen1 ││ Screen2 │

¦ │ ││ │

v │ ││ │

└─────────────┘└─────────────┘

Sample with 1920x1080 resolution

Screen1 goes from 0,0 till 1920,1080

Screen2 goes from 1921,0 till 3841,1080

Sample capture

This will start from

$ byzanz-record -x 0 -y 40 -w 500 -h 500 ./temp/zzzzz.gif

xy from full window with xwininfo

If you are lazy to type the coordinates and the highs, you could use something like xwininfo to get for you the informaion and put it in a small script.

This will fully capture a window, does not metter which size. It will open a vim and keeps recording it untill you close it ;)

$!/bin/bash

dwininfo_data=$(xwininfo)

declare -A xwin_data=(

["x"]="$(awk -F: '/Absolute upper-left X/{print $2}' <<<"${xwininfo_data}")"

["y"]="$(awk -F: '/Absolute upper-left Y/{print $2}' <<<"${xwininfo_data}")"

["w"]="$(awk -F: '/Width/{print $2}' <<<"${xwininfo_data}")"

["h"]="$(awk -F: '/Height/{print $2}' <<<"${xwininfo_data}")"

)

notify-send -u low -t 5000 "capture starts in 5 seconds"

sleep 5

notify-send -u critical -t 5000 "starting capture"

byzanz-record -e vim -x ${xwin_data["x"]} -y ${xwin_data["y"]} -w ${xwin_data["w"]} -h ${xwin_data["h"]} ./$(date +"%F_%T").gif

clevis

Table of Content

General

clevis is a framework for automated decryption policy. It allows you to define a policy at encryption time that must be satisfied for the data to decrypt. Once this policy is met, the data is decrypted.

Installation

Debian

$ apt install clevis

There are some integrations for clevis which can be right handy, for example:

- clevis-dracut

- clevis-initramfs

- clevis-luks

- clevis-systemd

- clevis-tpm2

- clevis-udisk2

Arch Linux

$ pacman -Syu clevis

Setup

For FullDisk encryption

performed beneath Debian 13/Trixie with UEFI and initramfs

So first of all, we have to install clevis as shown above and we also want to install clevis-initramfs and clevis-luks for our use case.

To add tang/clevis as a new encyption slot in your luks partition, you can use this command:

$ clevis luks bind -d /dev/<diskpartition> tang '{"url": "http://<your tang server>:<tang server port>"}'

Enter existing LUKS password:

The advertisement contains the following signing keys:

63rlX6JxefzIaf15K8eh1oCc_5u5f8Cd0fgKnTd6Ujc

Do you wish to trust these keys? [ynYN]

This will ask your for your encyption password and after you have enter that one (successfully), you will see a new keyslot used, which can be done with:

$ cryptsetup luksDump /dev/<diskpartition>

Next we configure initramfs and add the needed binaries to it.

First lets create the file /etc/initramfs-tools/scripts/local-top/run_net which will contain:

#!/bin/sh

. /scripts/functions

configure_networking

This will ensure that we will reload the network configuration while running the initramfs.

Next, we add a hook /usr/share/initramfs-tools/hooks/curl for add curl binary and certificates to the initramfs:

#!/bin/sh -e

PREREQS=""

case $1 in

prereqs) echo "${PREREQS}"; exit 0;;

esac

. /usr/share/initramfs-tools/hook-functions

#copy curl binary

copy_exec /usr/bin/curl /bin

#fix DNS lib (needed for Debian 11)

cp -a /usr/lib/x86_64-linux-gnu/libnss_dns* "${DESTDIR}/usr/lib/x86_64-linux-gnu/"

#DNS resolver

echo "nameserver <YOUR DNS SERVER IP>\n" > "${DESTDIR}/etc/resolv.conf"

#copy ca-certs for curl

mkdir -p "${DESTDIR}/usr/share"

cp -ar /usr/share/ca-certificates "${DESTDIR}/usr/share/"

cp -ar /etc/ssl "${DESTDIR}/etc/"

To load the correct driver/module into initramfs for your network card you need to add it to /etc/initramfs-tools/modules like it is described in the file itself.

After you are done with that, configure /etc/initramfs-tools/initramfs.conf by adding these two lines:

Device=<contains your network interface name>

IP=<your client static ip>::<your network gateway>:<your network mask>::<your network interface name, same as in Device>

if you use DHCP and you need to use this IP value instead:

IP=:::::<your network interface name, same as in Device>:dhcp

As final step, you need to update your initramfs by running:

$ update-initramfs -u -k "all"

If you want to check if everything got stored on the initramfs use the command lsinitrd to do so:

$ lsinitrd /boot/initramfs-<version>.img | grep 'what you are looking for'

For local luks container files

Make sure, you have install clevis, clevis-luks and clevis-systemd installed on your system.

To add tang/clevis as a new encyption slot in your luks file container, you can use this command:

$ clevis luks bind -d /path/to/luks/container tang '{"url": "http://<your tang server>:<tang server port>"}'

Enter existing LUKS password:

The advertisement contains the following signing keys:

63rlX6JxefzIaf15K8eh1oCc_5u5f8Cd0fgKnTd6Ujc

Do you wish to trust these keys? [ynYN]

This will ask your for your encyption password and after you have enter that one (successfully), you will see a new keyslot used, which can be done with:

$ cryptsetup luksDump /path/to/luks/container

To test if your setup is working, you can use the command:

$ clevis luks unlock -d /path/to/luks/container -n <name_of_unlocked_device>

If that command runs through without issues, you will be able to mount the open luks container using /dev/mapper/<name_of_unlocked_device> as device path. You will also be able to see the loopback device now with lsblk.

If you want to close it again, just use cryptsetup luksClose /path/to/luks/container (don’t forget to unmount if you have it mounted.

Why do we specify the paramerter

-n <name_of_unlocked_device>during theclevis luks unlockrun? It is because if you do not specify it, you will get a device name with format ofluks-<uuid>which is a pain to automate and not human readable.

If you want now your system to mount it after the boot is done, you will need to do two more things.

First, you need to adopt /etc/crypttab like so:

# <target name> <source device> <key file> <options>

luksname1 /data/mylukscont none luks,clevis,noearly,_netdev

According to some forums, you need

clevisand_netdevin there.I have added

noearlyoption to not impact the boot process

The next step is to enable the unit clevis-luks-askpass.path

$ systemctl enable clevis-luks-askpass.path

For me this still was not autounlocking it (tested with and without

noearlyoption)Then I found in some forums, that they have added the following attributes to the

clevis-luks-askpass.service:[Unit] Wants=network-online.target After=network-online.targetThis still not allowed me to autounlock it.

In theory it can be tested using the command

cryptdisks_start <target name from crttab>, but that is not confirmed.The man page of

clevis-luks-unlockers(man clevis-luks-unlockers) points out, that for late boot unlocking, you need to enable theclevis-luks-askpass.pathas mentioned before and that after the reboot, clevis will try to unlock all device listed in/etc/crypttabwhich have clevis bindings when sytemd prompts for the pwd.I was not able to confirm that, but will keep working on it, till now it is seen as NOT WORKING WITH AUTO UNLOCK.

Tang did a rekey lets rekey clevis

If you are using a tang server to automatically unlock your disk for example, it might happen that a rekey was performed.

To be able to deal with that, check which slot is used for tang in the luks parition and perform a clevis luks report like this:

$ clevis luks list -d /dev/sda2

1: tang '{"url":"http://localhost:8080"}'

$ clevis luks report -d /dev/sda2 -s 1

...

Report detected that some keys were rotated.

Do you want to regenerate luks metadata with "clevis luks regen -d /dev/sda2 -s 1"? [ynYN]

If you hit there [y|Y] it will enable the new key created by the tang key rotation.

What you can do as well, is to execute this one:

$ clevis luks regen -d /dev/sda2 -s 1

Docu review done: Wed 31 Jul 2024 02:09:25 PM CEST

Comparetools

meld

grafical application to compare folders and files it can compare also in a threway as well

Docu review done: Wed 31 Jul 2024 02:09:45 PM CEST

cpufreq

General

Utilities to deal with the cpufreq Linux kernel feature

Commands

| Command | Description |

|---|---|

cpufreq-info | Shows cpu informations |

cpufreq-set -g powersave | Sets governors to powersave |

cpufreq-set -g performance | Sets governors to performance |

Docu review done: Wed 31 Jul 2024 02:10:06 PM CEST

csync2

Table of Content

General

csync2 is a bidirectional sync tool on file base

Installation

$ apt install csync2

Setup and initialize

First thing is, that you need an tuhentication key

$ csync2 -k /etc/csync2.key

For the transfer it selfe, you need to generate a ssl cert as well

$ openssl genrsa -out /etc/csync2_ssl_key.pem 2048

$ openssl req -batch -new -key /etc/csync2_ssl_key.pem -out /etc/csync2_ssl_cert.csr

$ openssl x509 -req -days 3600 -in /etc/csync2_ssl_cert.csr -signkey /etc/csync2_ssl_key.pem -out /etc/csync2_ssl_cert.pem

To configure csync2 a small config file /etc/csync2/csync2.cfg is needed, where you define the hosts, keys and so on

group mycluster

{

host node1;

host node2;

key /etc/csync2.key;

include /www/htdocs;

exclude *~ .*;

}

Transfer config and certs/key to all the other nodes which are in your csync2 cluster and ensure that the service/socket is enabled and started.

$ sytstemctl enable --now csync2.socket

After the sockes are available everywhere, you can start the inintial sync.

$ csync2 -xv

Conflict handling

If csync2 detected changes during a sync by one or more hosts, you will get messages like this

While syncing file /etc/ssh/sshd_conf:

ERROR from peer site-2-host-1: File is also marked dirty here!

Finished with 1 errors.

To resolve the conflict, connect to the hosts were you know the correct file is located at and execute the following

$ csync2 -f /etc/ssh/sshd_conf

$ csync2 -x

Resetting a csync2 cluster

Resolves the following errors:

ERROR from peer 10.0.0.1: File is also marked dirty here!Database backend is exceedingly busy => Terminating (requesting retry).ERROR from peer 10.0.0.1: Connection closed.

First of all, make sure that csync2 process is running.

Then connect to all servers, and update the csync2 database.

$ csync2 -cIr /

IMPORTANT only perform the next steps on the master

Go to the server with the new files or corrected state (may be master =) Get all differences between master and slaves and mark for sync

$ csync2 -TUXI

Reset database on master to winn all conflicts and sync data to all slaves

$ csync2 -fr /

$ csync2 -xr /

Connect now again to all other servers too and run to check the sync state

$ csync2 -T

Docu review done: Wed 31 Jul 2024 02:11:55 PM CEST

darktable

Table of content

Darktable CLI

With the installation of darktable also the darktable-cli gets installed.

The darktable-cli offers a basic interaction with raw files, for example the convertion from .raw to .jpg, .png and so on

$ darktable-cli <RAWFILE> <OUTPUTFILE> --core --conf plugins/imageio/format/<FORMAT>/<SETTINGS>

For example, a conversion from .nef to .jpg using high quality resampling

$ darktable-cli DSC_0566.NEF ./JPEG/DSC_0566.jpg --core --conf plugins/imageio/format/jpeg/quality=100 --hq true

Docu review done: Wed 31 Jul 2024 02:11:47 PM CEST

dialog

General

dialog will spawn a dialog box to allows you enter some value which places the string into a file and than back into a variable

Sample

$ dialog --title "Start tracking" --backtitle "Start tracking" --inputbox "${timetotrackon}" 100 60 2>${outputfile} ; timetotrackon=$(<${outputfile})

[Docu with samples][https://bash.cyberciti.biz/guide/The_form_dialog_for_input]

Docu review done: Wed 31 Jul 2024 02:11:38 PM CEST

Dict Leo

Table of Content

Useage

| Comands | Description | Options |

|---|---|---|

leo [string] | searches for translation in english or german leos dictionary | |

leo -s [options] [stirng] | allowing of spell errors | [standard], on or off |

leo -c [options] [string] | allow umlaut alternatives | fuzzy, exact or [relaxed] |

leo -l [options] [string] | sets language | de/german, en/english, es/spanish, fr/french, ru/russian, pt/portuguese, pl/polish or ch/chinese |

leo -n [string] | don’t use escapes for highlighting | Default: do highlighting |

leo -f [string] | don’t use the query cache | Default: use the cache |

Sample

$ leo nerd

Found 7 matches for 'nerd' on dict.leo.org:

Nouns

nerd die Langweilerin

nerd der Computerfreak

nerd die Fachidiotin

nerd der Schwachkopf

nerd hochintelligente, aber kontaktarme Person

nerd der Sonderling

nerd die Streberin

$ leo -l es wilkommen

Found 11 matches for 'willkommen' on dict.leo.org:

Nouns

la bienvenida der Willkommen

Adjectives/Adverbs

bienvenida willkommen

Phrases/Collocations

¡Bienvenida! Herzlich Willkommen!

¡Bienvenido! Herzlich Willkommen!

¡Bienvenidas! Herzlich Willkommen!

¡Bienvenidos! Herzlich Willkommen!

¡Bienvenidos a bordo! Willkommen an Bord!

¡Bienvenidos a Alemania! Willkommen in Deutschland!

¡Bienvenido a casa! Willkommen zu Hause!

Verbs

dar la bienvenida a alguien jmdn. willkommen heißen

recibir a alguien jmdn. willkommen heißen

# same as above but specify the direction of translation

$ leo -l de2es willkommen

Installation

$ apt install libwww-dict-leo-org-perl

URLs

Docu review done: Wed 31 Jul 2024 02:11:33 PM CEST

docker

General

docer interact with docker containers,nodes,…

Troubleshooting

$ docker commit <DockerID|Docername> <debugimagename> # creates new docker image from broken container

Debugging docker containers

$ docker commit apacheserver debug/apache # creates new docker image from broken container

$ docker run -it --entrypoint=/bin/sh debug/apache # starts only docker container root process

$ docker stats # like a top for docker processes

Docu review done: Wed 31 Jul 2024 02:12:11 PM CEST

easytag

General

easytag is an standard audio tag manipulation software with standard quality.

cons: It very often detects changes as it tried to automatically update tags based on matches on the internet

Docu review done: Wed 31 Jul 2024 02:12:42 PM CEST

exiftool

Table of content

General

exiftool allows you to modify the metadata from picutes

Installation

This application can be installed with apt

$ apt install libimage-exiftool-perl

Commands

| Commands | Descriptions |

|---|---|

--list | lists all metadata from file |

-o OUTFILE (-out) | Set output file or directory name |

-overwrite_original | Overwrite original by renaming tmp file |

-P | Preserve file modification date/time |

-r | Recursively process subdirectories |

-scanForXMP | Brute force XMP scan |

-all= | clears all metadata |

Samples

List metadata about files

$ exiftool --list IMG_20200329_002001.jpg

ExifTool Version Number : 12.00

File Name : IMG_20200329_002001.jpg

Directory : .

File Size : 5.8 MB

File Modification Date/Time : 2020:03:29 00:30:16+01:00

File Access Date/Time : 2020:06:30 11:06:24+02:00

File Inode Change Date/Time : 2020:06:30 11:05:32+02:00

File Permissions : rw-r--r--

File Type : JPEG

File Type Extension : jpg

MIME Type : image/jpeg

Image Width : 5472

Image Height : 7296

Encoding Process : Baseline DCT, Huffman coding

Bits Per Sample : 8

Color Components : 3

Y Cb Cr Sub Sampling : YCbCr4:2:0 (2 2)

Image Size : 5472x7296

Megapixels : 39.9

Removes all tabgs from all files found by *.jpg including subdirs

$ exiftool -r -overwrite_original -P -all= *.jpg

URL

Docu review done: Mon 03 Jul 2023 16:34:12 CEST

fdupes

Table of Content

General

fdupes finds duplicate files in a given set of directories

Searches the given path for duplicate files. Such files are found by comparing file sizes and MD5 signatures, followed by a byte-by-byte comparison.

Install

$ apt install fdupes

Parameters

| Parameters | Description |

|---|---|

-r /path/to/dir1 /path/to/dir2 [/path3,...] | for every directory given follow subdirectories encountered within |

-R: /path/to/dir1 /path/to/dir2 [/path3,...] | for each directory given after this option follow subdirectories encountered within |

-s | follows symlinked directoires |

-H | normally, when two or more files point to the same disk area they are treated as non-duplicates; this option will change this behavior |

-n | exclude zero-length files from consideration |

-A | exclude hidden files from consideration |

-S | show size of duplicate files |

-m | summarize duplicate files information |

-q | quite |

-d | prompt user for files to preserve, deleting all others |

-N | when used together with -d, preserve the first file in each set of duplicates and delete the others without prompting the user |

-I | delete duplicates as they are encountered, without grouping into sets; implies -H |

-p | don’t consider files with different owner/group or permission bits as duplicates |

-o [time/name] | order files according to WORD: time - sort by mtime, name - sort by filenam |

-i | reverse order while sorting |

-1 | outputs each duplicates mach in one line |

Examples

# the quer files differes between test1 and test2 dir

$ md5sum test*/*

b026324c6904b2a9cb4b88d6d61c81d1 test1/1

31d30eea8d0968d6458e0ad0027c9f80 test1/10

26ab0db90d72e28ad0ba1e22ee510510 test1/2

6d7fce9fee471194aa8b5b6e47267f03 test1/3

e760668b6273d38c832c153fde5725da test1/4

1dcca23355272056f04fe8bf20edfce0 test1/5

9ae0ea9e3c9c6e1b9b6252c8395efdc1 test1/6

84bc3da1b3e33a18e8d5e1bdd7a18d7a test1/7

c30f7472766d25af1dc80b3ffc9a58c7 test1/8

9e6b1b425e8c68d99517d849d020c8b7 test1/9

d8016131a2724252b2419bf645aab221 test1/qwer

b026324c6904b2a9cb4b88d6d61c81d1 test2/1

31d30eea8d0968d6458e0ad0027c9f80 test2/10

26ab0db90d72e28ad0ba1e22ee510510 test2/2

6d7fce9fee471194aa8b5b6e47267f03 test2/3

e760668b6273d38c832c153fde5725da test2/4

1dcca23355272056f04fe8bf20edfce0 test2/5

9ae0ea9e3c9c6e1b9b6252c8395efdc1 test2/6

84bc3da1b3e33a18e8d5e1bdd7a18d7a test2/7

c30f7472766d25af1dc80b3ffc9a58c7 test2/8

9e6b1b425e8c68d99517d849d020c8b7 test2/9

2b00042f7481c7b056c4b410d28f33cf test2/qwer

$ fdupes -ri1 ./test1 ./test2

test2/7 test1/7

test2/9 test1/9

test2/3 test1/3

test2/1 test1/1

test2/2 test1/2

test2/4 test1/4

test2/10 test1/10

test2/6 test1/6

test2/8 test1/8

test2/5 test1/5

ffmpeg

general notes on ffmpeg

loops

ffmpeg consumes stdin and acts weird if you loop for example over a list of files provided by stdin.

solution:

while read -r file; do

cat /dev/null | ffmpeg -i "${file}" "${file}.new"

done <./toEncode.lst

Convert commands with ffmpeg

Amazone offers the files in default as m4a files

$ ffmpeg -i Weihnachtsklingel-6G8_sdQ26MY.m4a -vn -ab 320k -ar 44100 Weihnachtsklingel.mp3

Converts all flac files to mp3 and keeps metat data (cover art not working)

$ for f in $(ls -1 *.flac) ; do ffmpeg -i "${f}" -vn -map_metadata 0 -id3v2_version 3 -ab 320k -ar 44100 "$(sed -E 's/\.flac/\.mp3/g' <<<"${f}")" ; done

Converts all mp3 files to flac

$ while read line ; do yes | ffmpeg -i "$line" "$(echo "$line" | sed -e 's/mp3/flac/g')" -vsync 2 ; done<<<$(find ./ -name "*mp3")

Converts $file into avi thats working on bones Kid Car TVs

$ ffmpeg -i "${file}" -vf scale=720:-1 -b:v 1.2M -vcodec mpeg4 -acodec mp3 "${destination:-.}/${file/%${file##*\.}/avi}"

Changing video rotation or flip it

flip video vertically

$ ffmpeg -i inputfile -vf vflip -c:a copy outputfile

flip video horizontally

$ ffmpeg -i inputfile -vf hflip -c:a copy outputfile

rotate 90 degrees clockwise

$ ffmpeg -i inputfile -vf transpose=1 -c:a copy outputfile

rotate 90 degrees counterclockwise

$ ffmpeg -i inputfile -vf transpose=2 -c:a copy outputfile

Docu review done: Wed 31 Jul 2024 02:13:10 PM CEST

fio

Table of content

Installation

$ apt install fio

Testing IOPS

RW performance

The first test is for measuring random read/write performances. In a terminal, execute the following command:

$ fio --randrepeat=1 --ioengine=libaio --direct=1 --gtod_reduce=1 --name=test --filename=random_read_write.fio --bs=4k --iodepth=64 --size=4G --readwrite=randrw --rwmixread=75

During the test, the terminal window will display an output like the following one:

test: (g=0): rw=randrw, bs=4K-4K/4K-4K/4K-4K, ioengine=libaio, iodepth=64

fio-2.2.8

Starting 1 process

test: Laying out IO file(s) (1 file(s) / 4096MB)

Jobs: 1 (f=1): [m(1)] [0.1% done] [447KB/131KB/0KB /s] [111/32/0 iops] [eta 01h:Jobs: 1 (f=1): [m(1)] [0.1% done] [383KB/147KB/0KB /s] [95/36/0 iops] [eta 01h:4Jobs: 1 (f=1): [m(1)] [0.1% done] [456KB/184KB/0KB /s] [114/46/0 iops] [eta 01h:Jobs: 1 (f=1): [m(1)] [0.1% done] [624KB/188KB/0KB /s] [156/47/0 iops] [eta 01h:Jobs: 1 (f=1): [m(1)] [0.1% done] [443KB/115KB/0KB /s] [110/28/0 iops] [eta 01h:Jobs: 1 (f=1): [m(1)] [0.1% done] [515KB/95KB/0KB /s] [128/23/0 iops] [eta 01h:4Jobs: 1 (f=1): [m(1)] [0.1% done] [475KB/163KB/0KB /s] [118/40/0 iops] [eta 01h:Jobs: 1 (f=1): [m(1)] [0.2% done] [451KB/127KB/0KB /s] [112/31/0 iops]

So, the program will create a 4GB file (--size=4G), and perform 4KB reads and writes using three reads for every write ratio (75%/25%, as specified with option --rwmixread=75), split within the file, with 64 operations running at a time. The RW ratio can be adjusted for simulating various usage scenarios.

At the end, it will display the final results:

test: (groupid=0, jobs=1): err= 0: pid=4760: Thu Mar 2 13:23:28 2017

read : io=7884.0KB, bw=864925B/s, iops=211, runt= 9334msec

write: io=2356.0KB, bw=258468B/s, iops=63, runt= 9334msec

cpu : usr=0.46%, sys=2.35%, ctx=2289, majf=0, minf=29

IO depths : 1=0.1%, 2=0.1%, 4=0.2%, 8=0.3%, 16=0.6%, 32=1.2%, >=64=97.5%

submit : 0=0.0%, 4=100.0%, 8=0.0%, 16=0.0%, 32=0.0%, 64=0.0%, >=64=0.0%

complete : 0=0.0%, 4=100.0%, 8=0.0%, 16=0.0%, 32=0.0%, 64=0.1%, >=64=0.0%

issued : total=r=1971/w=589/d=0, short=r=0/w=0/d=0, drop=r=0/w=0/d=0

latency : target=0, window=0, percentile=100.00%, depth=64

Run status group 0 (all jobs):

READ: io=7884KB, aggrb=844KB/s, minb=844KB/s, maxb=844KB/s, mint=9334msec, maxt=9334msec

WRITE: io=2356KB, aggrb=252KB/s, minb=252KB/s, maxb=252KB/s, mint=9334msec, maxt=9334msec

Disk stats (read/write):

dm-2: ios=1971/589, merge=0/0, ticks=454568/120101, in_queue=581406, util=98.44%, aggrios=1788/574, aggrmerge=182/15, aggrticks=425947/119120, aggrin_queue=545252, aggrutil=98.48%

sda: ios=1788/574, merge=182/15, ticks=425947/119120, in_queue=545252, util=98.48%

Note from the author: I ran fio on my laptop, so the last output was obtained running the test with a 10MB file; as can be seen above, the 4GB option would have taken more than 1 hour.

Random read performance

In this case, the command is:

$ fio --randrepeat=1 --ioengine=libaio --direct=1 --gtod_reduce=1 --name=test --filename=random_read.fio --bs=4k --iodepth=64 --size=4G --readwrite=randread

The output will be similar to the RW case, just specialized in the read case.

Random write performance

$ fio --randrepeat=1 --ioengine=libaio --direct=1 --gtod_reduce=1 --name=test --filename=random_write.fio --bs=4k --iodepth=64 --size=4G --readwrite=randwrite

As above, in random write case.

Write test sample

$ fio --time_based --filename=./fio-bench-file --direct=1 --rw=write --refill_buffers --ioengine=libaio --bs=128k --size=4G --filesize=4G --iodepth=16 --numjobs=24 --runtime=600 --group_reporting --name=fio_secwrite

Benchmark disk sample

#!/bin/bash

#

# - Required Debian packages: bonnie++ fio libaio1

#

CPUS=$(cat /proc/cpuinfo | grep processor | wc -l)

RAM=$(free -m | grep 'Mem:' | perl -ne '/Mem:\s+(\d+)/; print $1')

BLOCK_COUNT=$(echo "scale=0;(${RAM} * 1024) * 4.25 / 8"|bc -l) # (RAM = in MB, so * 1024) * 4.25 / 8k blocksize

RAM_BYTES=$(echo "scale=0; $RAM * 1024 * 1024" | bc -l)

FIO_BENCH_SIZE=$(echo "scale=0; $RAM_BYTES * 4.25" | bc -l)

FIO_CPUS=$(echo "scale=0; $CPUS * 2" | bc -l)

logfile="bench_disk_$$.log"

openlog() {

exec 6>&1

exec 7>&2

exec >> $logfile 2>&1

}

closelog() {

# Close the file descriptors

exec 1>&6 6>&-

exec 2>&7 7>&-

}

# disable oom killer for this process

echo -17 > /proc/$$/oom_adj

openlog

echo "Number of CPUs: $CPUS"

echo "Available RAM : $RAM"

echo "Available RAM : $RAM_BYTES bytes"

echo "Block Size : $BLOCK_COUNT"

closelog

TEST_DIR=${1:-/data/postgres/testdir}

BENCH1="bench01"

BENCH2="bench02"

BENCH3="bench03"

BENCH4="bench04"

BENCH5="bench05"

openlog

echo "Test directory: $TEST_DIR"